DevOps in Data Science: Bridging the Gap for Enhanced Productivity

In today’s rapidly evolving tech landscape, the ability to deploy innovations swiftly and efficiently is paramount. This is where DevOps comes in: a set of practices that combines software development (Dev) and IT operations (Ops) to shorten the systems development life cycle while frequently delivering features, fixes, and updates in close alignment with business objectives. DevOps is not just a methodology but a culture that fosters collaboration among all stakeholders from planning through production, supporting continuous improvement and high efficiency.

DevOps matters because it enables organizations to respond to market changes faster, improves the quality of software deployments, and raises the rate of successful product releases. DevOps practices also automate repetitive tasks, reduce manual errors, and make complex environments manageable in a scalable and predictable way.

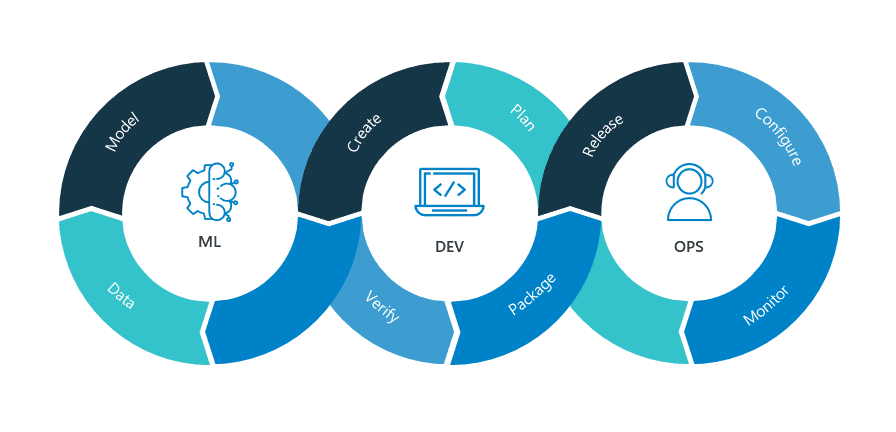

When it comes to data science projects, integrating DevOps practices introduces a paradigm shift. Traditionally, data science has been treated as a standalone field: models are built and refined in isolation, then handed off to engineering teams for deployment. This often creates bottlenecks and makes models hard to deploy effectively. By applying DevOps principles such as automation, continuous integration, continuous deployment, and monitoring to data science, organizations can streamline the lifecycle of data science projects from research to production. This specialized approach, often called MLOps when applied specifically to machine learning, enhances collaboration between data scientists and operations teams, shortens the time-to-market for new predictive models, and helps models perform reliably in production environments.

This blog aims to explore how DevOps can be tailored to fit data science projects, enhancing not just the speed but also the quality and reliability of data-driven decisions. We will delve into the tools, best practices, and case studies that illustrate the successful marriage of DevOps and data science, providing a blueprint for organizations looking to harness the full potential of their data science initiatives.

Understanding DevOps and Data Science

The Basics of DevOps

DevOps is built on several core principles that aim to unify development and operations teams to improve productivity and the quality of software products. Three foundational principles are automation, continuous integration (CI), and continuous deployment (CD):

Automation: In DevOps, automation reduces the need for manual intervention in the software delivery process, covering everything from code builds and tests to application deployment. Automation reduces the potential for errors, speeds up delivery, and frees team members to focus on more strategic tasks.

Continuous Integration (CI): CI is a practice where developers frequently merge their code changes into a central repository, after which automated builds and tests run. The main goals of CI are to find and fix bugs sooner, improve software quality, and reduce the time it takes to validate and release new software updates.

Continuous Deployment (CD): CD extends CI by automatically deploying every change that passes the build and test stages to a testing and/or production environment. This allows for faster feedback and provides a consistent, streamlined way to release new features and changes quickly and sustainably. (A closely related practice, continuous delivery, keeps every change ready to deploy but leaves the final push to production as a manual decision.)

The Role of Data Science

Data Science involves extracting knowledge and insights from structured and unstructured data. It draws on statistics, scientific methods, artificial intelligence (AI), and data analysis to process and analyze large amounts of data. Its key components include:

Data Analysis: This is the process of inspecting, cleansing, transforming, and modeling data to discover useful information, inform conclusions, and support decision-making.

Machine Learning Models: These are produced by algorithms that learn patterns from historical data rather than being explicitly programmed with rules. Once trained, a model is used to predict outcomes for new data.

Business Implications: The insights derived from data science are pivotal for strategic decision-making. They can significantly impact business outcomes by identifying new opportunities, optimizing operations, and predicting trends.

Why Combine DevOps with Data Science?

Integrating DevOps principles with data science practices can yield significant benefits:

Faster Deployment of Models: CI/CD pipelines in data science workflows automate the path from a trained model to production, shortening the time between development and real-world use.

Better Collaboration: Combining DevOps with data science breaks down silos between data scientists, developers, and IT operations, fostering better collaboration and communication. This unified approach ensures that models are scalable, maintainable, and aligned with business needs.

More Reliable Data-Driven Applications: Continuous testing and deployment help detect and fix issues early, leading to more robust and reliable applications. Monitoring models in production also reveals when their performance degrades as new data arrives, so they can be retrained or replaced before the decline affects the business.

Through these integrations, DevOps and data science not only enhance operational efficiencies but also create a more agile and responsive data-driven culture in organizations.

Key Practices and Tools

Version Control Systems

Version control systems are fundamental to any development project, including data science. They allow multiple people to work on a project without interfering with each other’s work. Git, the most widely used version control system, manages changes to code (and, with companion tools for large files, to data), facilitates collaboration, and tracks progress over time. It enables data scientists and developers to:

Track Revisions: Keep a record of who made changes, what changes were made, and when they were made, allowing for easy tracking of project evolution.

Branch and Merge: Safely experiment by creating branches, and seamlessly integrate new features through merging.

Roll Back: Revert to previous versions of the project if a problem arises with the current version.

Using Git lets all project members work simultaneously on different aspects of a project with far less risk of losing progress or overwriting each other’s contributions.

Containerization and Virtualization

Docker and Kubernetes are pivotal in managing dependencies and environments efficiently in data science projects, ensuring that applications run consistently across different computing environments:

Docker: Docker containers package software together with everything it needs to run: code, runtime, system tools, and system libraries. Because the container carries its own dependencies, the software behaves consistently on a laptop, a CI runner, or a production server. (Containers still share the host’s kernel, so this consistency is strong but not absolute.)

Kubernetes: While Docker builds and runs individual containers, Kubernetes orchestrates containers across a cluster of machines: scheduling them, scaling them up or down, and restarting or replacing failed ones, among other tasks. This is particularly useful for data science applications that must scale across many instances to process large volumes of data.

These tools help manage project complexities, reduce conflicts among team members working in different environments, and streamline deployment processes.

Continuous Integration and Deployment

For data science, Continuous Integration (CI) and Continuous Deployment (CD) extend beyond traditional software development by addressing specific challenges associated with data and models:

CI in Data Science: Involves automatically testing the data processing pipelines and model training scripts to ensure that new code commits do not break any existing functionalities.

CD in Data Science: Automates the deployment of trained models to production systems. This could involve replacing a machine learning model in a live environment, which must be done carefully to avoid degrading performance, for example by first checking that the new model outperforms the one it replaces.
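To make the CI point concrete, here is a minimal Python sketch of the kind of test a CI pipeline might run on every commit. The `clean_ages` function and its 0 to 120 age range are hypothetical stand-ins for a real data processing step. The `test_` functions follow pytest's naming convention, so pytest would discover and run them, but plain `assert` statements keep the file runnable without it.

```python
import math


def clean_ages(rows):
    """Drop records with a missing or implausible age and cast ages to int.

    `rows` is a list of dicts with an "age" key. The 0-120 range is an
    illustrative assumption, not a rule taken from any real dataset.
    """
    cleaned = []
    for row in rows:
        age = row.get("age")
        if age is None:
            continue
        try:
            age = float(age)  # accepts ints, floats, and numeric strings
        except (TypeError, ValueError):
            continue
        if math.isnan(age) or not 0 <= age <= 120:
            continue
        cleaned.append({**row, "age": int(age)})  # copy; never mutate input
    return cleaned


# Tests a CI runner such as pytest would discover and run on every commit.
def test_drops_missing_and_out_of_range_ages():
    rows = [{"age": 34}, {"age": None}, {"age": -5}, {"age": 150}, {"age": "41"}]
    assert [r["age"] for r in clean_ages(rows)] == [34, 41]


def test_preserves_other_fields():
    rows = [{"id": 7, "age": 29.0}]
    assert clean_ages(rows) == [{"id": 7, "age": 29}]


def test_does_not_mutate_input():
    rows = [{"age": "30"}]
    clean_ages(rows)
    assert rows == [{"age": "30"}]
```

If a commit changes `clean_ages` so that, say, numeric strings are silently dropped, the first test fails and the pipeline blocks the merge before the broken step reaches model training.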

Implementing CI/CD pipelines in data science projects helps ensure that models are robust, accurate, and deployable at any time, thereby enabling faster and more frequent updates and enhancements.
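A CD pipeline for models usually needs a gate that decides whether a newly trained model may replace the one in production. The sketch below assumes hypothetical evaluation results (accuracy and 95th-percentile latency measured on a held-out set) and illustrative thresholds; the `EvalResult` type and `should_promote` function are invented for this example. Real gates often check several metrics, plus fairness or compliance criteria.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalResult:
    model_version: str
    accuracy: float        # measured on a held-out evaluation set
    p95_latency_ms: float  # 95th-percentile inference latency


def should_promote(
    candidate: EvalResult,
    production: Optional[EvalResult],
    min_gain: float = 0.005,
    max_latency_ms: float = 200.0,
) -> bool:
    """Return True only if the candidate is fast enough and measurably better.

    Both thresholds are hypothetical defaults; real values depend on the use case.
    """
    if candidate.p95_latency_ms > max_latency_ms:
        return False  # too slow to serve, regardless of accuracy
    if production is None:
        return True  # first deployment: no incumbent model to beat
    return candidate.accuracy >= production.accuracy + min_gain
```

A CI/CD job could call `should_promote` after evaluation and fail the job (for example by exiting with a non-zero status) when it returns `False`, so the deployment step never runs. Requiring a minimum gain rather than any improvement avoids churning production models over differences within measurement noise.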

Monitoring and Logging

Monitoring and logging are crucial for maintaining the performance of data science applications in production. Tools like Prometheus, Grafana, and ELK Stack (Elasticsearch, Logstash, and Kibana) are widely used for these purposes:

Monitoring: Tools like Prometheus can be used to collect and store metrics from live applications. Grafana integrates with Prometheus to visualize these metrics through dashboards, providing insights into the application’s performance and helping detect anomalies in real time.

Logging: The ELK Stack is effective for managing logs generated by data science applications. Elasticsearch indexes and stores the logs, Logstash processes them, and Kibana provides powerful visualizations, making it easier to understand the logs and spot trends or issues.

Together, these tools not only help in identifying and resolving issues quickly but also play a significant role in optimizing the performance of data-driven applications over time.
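Logstash and Elasticsearch handle logs most easily when each line is a self-describing record. A minimal sketch using only Python's standard `logging` module emits one JSON object per line. The field names (`model_version`, `latency_ms`), the logger name, and the convention of passing extra fields under a `fields` key are assumptions for illustration, not a required schema.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via extra={"fields": {...}} are merged in, so values
        # such as the model version become searchable keys after indexing.
        payload.update(getattr(record, "fields", {}))
        return json.dumps(payload)


def make_logger(name="model_service", stream=None):
    """Build a logger that writes JSON lines to `stream` (stderr by default)."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]  # replace any handlers from earlier calls
    logger.setLevel(logging.INFO)
    logger.propagate = False     # avoid duplicate lines via the root logger
    return logger
```

A serving endpoint might then call `make_logger().info("prediction served", extra={"fields": {"model_version": "v2", "latency_ms": 41.7}})`, producing a single JSON line that can be shipped to Logstash and filtered by `model_version` in Kibana.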

Case Studies and Real-World Examples

Small Business: Leveraging DevOps in Data Science

For a small tech startup like XYZ Analytics, which provides customer behavior analytics, integrating DevOps into their data science workflow can significantly enhance their operational efficiency and product quality. Here’s how they can leverage DevOps:

Automated Testing and Deployment: By implementing Continuous Integration (CI) and Continuous Deployment (CD) pipelines, XYZ Analytics can automate the testing of their data models. Whenever a data scientist commits changes to a model, the CI/CD pipeline automatically runs tests to validate those changes and deploys them if they pass. This reduces human error and speeds up the deployment cycle, allowing XYZ to respond quickly to changes in customer behavior or requirements.

Scalable Model Serving: Using containerization tools like Docker, combined with orchestration platforms like Kubernetes, XYZ can deploy and scale their machine learning models efficiently. This setup allows them to handle varying loads of analytics requests, which is crucial during peak business hours or promotional periods for their clients.

Version Control for Experimentation: With Git, the team can manage multiple versions of models and experiments without conflict. This enables them to easily compare different model versions and roll back to previous versions if newer ones underperform, ensuring that they always deliver the best possible analytics to their clients.

Large Corporation: DevOps Practices in Large-Scale Data Science

Consider a multinational bank that uses complex models to assess credit risk. Integrating DevOps into their large-scale data science projects can address several operational challenges and bring substantial benefits:

Enhanced Collaboration Across Teams: In a large setting, DevOps practices break down silos between data scientists, software developers, and IT operations staff. For instance, when the bank updates its risk assessment models, DevOps facilitates a smoother workflow where models are quickly integrated, tested, and deployed across global operations, ensuring consistency and compliance.

Robust Compliance and Security: By integrating security into the DevOps pipeline (a practice known as DevSecOps), the bank can automate security audits and compliance checks. Static code analysis and automated compliance tests in the CI/CD pipeline help verify that code and model updates meet stringent regulatory standards before deployment, which is critical for banking operations.

Real-Time Monitoring and Rapid Iteration: The bank can utilize sophisticated monitoring tools to observe the performance of deployed models in real time. This enables quick iteration and refinement of models based on current performance data, reducing risks associated with credit decisions and adapting quickly to changes in the market or regulatory environment.
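One common way to monitor a deployed credit model is to watch for drift in its input or score distributions. The sketch below computes the population stability index (PSI), a drift measure long used in credit scoring, comparing a reference sample (such as scores at training time) with live scores. The equal-width binning and the epsilon for empty bins are simplifying assumptions. A commonly quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but thresholds should be set per model.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample of the same variable.

    Bin edges are equal-width over the reference sample's range; values
    outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [c / len(values) for c in counts]

    eps = 1e-6  # keeps empty bins from producing log(0)
    psi = 0.0
    for e, a in zip(shares(expected), shares(actual)):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi
```

A scheduled monitoring job could compute this daily for each model input and alert when the value crosses the chosen threshold, prompting the team to investigate or retrain.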

For both small businesses and large corporations, DevOps practices tailored to data science workflows can significantly streamline operations, enhance model quality, and increase business agility. While small businesses like XYZ Analytics benefit from rapid deployment and scalability, large corporations like multinational banks benefit from enhanced compliance, security, and global collaboration.

Challenges and Considerations

Data Security and Compliance

Integrating DevOps practices into data science projects raises several unique challenges regarding data security and compliance. The agile nature of DevOps, characterized by frequent changes and rapid deployment, can conflict with the stringent requirements of data privacy and regulatory compliance. Key concerns include:

Data Protection: Ensuring that personal and sensitive data are protected according to legal standards such as GDPR or HIPAA. This involves implementing robust encryption, data masking, and access controls.

Audit Trails: Maintaining detailed logs of data access and changes is critical for compliance. DevOps tools must integrate with security systems to provide comprehensive and immutable logs that can be reviewed during audits.

Compliance in Automation: Automating compliance checks within CI/CD pipelines ensures that every release adheres to regulatory standards. Static code analysis tools and automated compliance tests can be integrated into the deployment process.
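Data masking and automated compliance checks can be combined in a single pipeline step. This sketch assumes records are Python dicts with a hypothetical `email` field. It pseudonymizes values with HMAC-SHA256 from the standard library, then runs a simple check that reports anything still resembling a raw email address. Keyed pseudonymization is not full anonymization (GDPR, for example, generally still treats pseudonymized data as personal data), and the regular expression is a deliberately rough heuristic.

```python
import hashlib
import hmac
import re

# Rough heuristic for "looks like an email address"; not RFC-complete.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def pseudonymize(value, key):
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    The same value and key always yield the same token, so joins still work;
    without the secret key, tokens cannot be recomputed from guessed values.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_records(records, key, pii_fields=("email",)):
    """Return copies of `records` with the listed PII fields pseudonymized."""
    masked = []
    for rec in records:
        rec = dict(rec)  # copy so the caller's data is untouched
        for field in pii_fields:
            if rec.get(field) is not None:
                rec[field] = pseudonymize(str(rec[field]), key)
        masked.append(rec)
    return masked


def find_raw_emails(records):
    """Compliance check: (row index, field) pairs that still look like emails."""
    hits = []
    for i, rec in enumerate(records):
        for field, value in rec.items():
            if isinstance(value, str) and EMAIL_RE.search(value):
                hits.append((i, field))
    return hits
```

In a CI/CD pipeline, a step could run `find_raw_emails` on a sample of each dataset a release produces and fail the release if the result is non-empty. The HMAC key would come from a secrets manager, never from the repository.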

To address these challenges, organizations need to embed security and compliance checks into their DevOps workflows from the outset, rather than treating them as afterthoughts. Additionally, employing DevSecOps practices, where security is a shared responsibility integrated into every phase of development, can help maintain the balance between rapid deployment and strict compliance requirements.

Managing Large Data Sets

Handling and versioning large data sets in a DevOps environment can be particularly challenging due to the sheer volume of data and the dynamic nature of data science projects. Key solutions include:

Data Virtualization: Instead of moving large data sets across different environments, data virtualization allows teams to access and manipulate data without replicating it, reducing storage needs and improving efficiency.

Data Version Control Systems: Tools like DVC (Data Version Control) or lakeFS manage versions of datasets alongside code. DVC works with Git, committing small metadata files to the repository while the large files, models, and datasets themselves live in separate storage; lakeFS brings Git-like branches and commits to data held in object storage.

Efficient Storage Solutions: Utilizing scalable storage solutions such as object storage can help manage large volumes of data effectively. Implementing data lifecycle policies can also ensure that only relevant data is stored actively, while older, less relevant data is archived.
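Data version control tools rest on a simple idea: identify a dataset by a hash of its contents and commit only a small pointer file to Git. The sketch below illustrates that idea with an MD5 content hash and a hypothetical JSON pointer format (`<file>.ptr.json`). It is not DVC's actual file format or behavior; a real tool also handles caching, remote storage, and directories.

```python
import hashlib
import json
from pathlib import Path


def file_md5(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large datasets never load fully into memory."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_pointer(data_path, pointer_path=None):
    """Write a small JSON pointer file (hypothetical format) describing the data.

    The pointer is what gets committed to Git; the data file itself would be
    uploaded to object storage and ignored by Git.
    """
    data_path = Path(data_path)
    pointer_path = Path(pointer_path or f"{data_path}.ptr.json")
    pointer = {
        "path": data_path.name,
        "md5": file_md5(data_path),
        "size": data_path.stat().st_size,
    }
    pointer_path.write_text(json.dumps(pointer, indent=2))
    return pointer
```

Because the hash changes whenever a single byte of the data changes, the Git history of the pointer file records exactly which dataset version each model was trained on.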

Implementing these tools and practices ensures that large data sets are handled efficiently, securely, and in a manner that aligns with the fast-paced nature of DevOps-driven environments.

Bridging the Skill Gap

The integration of DevOps into data science requires a blend of skills from both domains. Bridging this skill gap involves several strategic steps:

Cross-Training: Offering cross-training opportunities where data scientists learn basic DevOps practices and tools, and vice versa, can foster a deeper understanding and appreciation of each other’s roles.

Collaborative Projects: Engaging both data scientists and DevOps teams in joint projects can promote practical learning and immediate application of cross-disciplinary skills.

Mentorship Programs: Pairing experienced DevOps professionals with data scientists as mentors, and vice versa, can facilitate the transfer of specialized skills.

By investing in training and collaborative experiences, organizations can cultivate a workforce that is versatile, more aligned with the agile methodology of DevOps, and capable of tackling complex, data-intensive projects efficiently. This not only enhances productivity but also promotes innovation and continuous improvement in data science projects.

Future of DevOps in Data Science

As we look to the future of DevOps in data science, several emerging trends and technological advancements promise to reshape how data-driven organizations operate. In particular, using artificial intelligence (AI) to automate DevOps tasks and the continuing evolution of tools and practices are expected to bring significant changes.

AI in Automating DevOps Tasks

One of the most exciting developments is the use of AI to automate many aspects of DevOps processes, enhancing efficiency and reducing the scope for human error. Here are a few areas where AI is making an impact:

Predictive Analytics in Operations: AI can predict failures and issues by analyzing data from DevOps processes. This predictive capacity allows teams to prevent downtime and address issues before they impact the business, leading to more stable operations.

AI-Driven Code Reviews and Testing: AI tools are increasingly able to assist with code reviews, flagging issues that traditionally only human reviewers caught. AI can also help generate and automate complex test scenarios, reducing the time required for testing and improving code quality.

Intelligent Automation: Beyond rule-based automation, AI enables systems that learn and adapt over time. For instance, automated systems can tune parts of their own configuration and decision-making based on observed outcomes, reducing the manual effort needed for deployments and operational tasks.
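Predictive failure detection often starts from something much simpler than AI: flagging operational metrics that break sharply from recent history. The sketch below is a rolling z-score detector, a plain statistical baseline rather than a machine learning model, meant for a series such as request latencies. The window size and threshold are illustrative defaults, and `detect_anomalies` is a name invented for this example.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=20, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` points."""
    history = deque(maxlen=window)  # oldest points drop off automatically
    flagged = []
    for i, v in enumerate(values):
        if len(history) == window:
            recent = list(history)
            mu = mean(recent)
            sigma = pstdev(recent)
            # A perfectly flat history has sigma == 0; skip rather than divide by zero.
            if sigma > 0 and abs(v - mu) / sigma > threshold:
                flagged.append(i)
        history.append(v)
    return flagged
```

Baselines like this are worth having even when an ML-based detector is planned: they are cheap to run, easy to explain during an incident, and give a reference point that a more sophisticated model must beat.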

Evolution of Tools and Practices

As AI and machine learning continue to mature, the tools and practices around DevOps in data science are also evolving:

Enhanced Collaboration Tools: Future tools will likely focus more on enhancing collaboration not just between human team members but also between humans and AI-driven systems. These tools will facilitate seamless interactions and integration of AI insights into the DevOps workflows.

Shift from CI/CD to Continuous Experimentation and Learning: The traditional model of Continuous Integration and Continuous Deployment (CI/CD) is evolving into a more dynamic model of continuous experimentation and learning, where data science models are constantly tested, updated, and improved based on ongoing feedback and learning.

Hybrid Cloud and Multi-Cloud Strategies: With the increasing adoption of cloud technologies, there is a growing trend towards using hybrid and multi-cloud environments. DevOps tools will continue to evolve to better manage these complex environments, ensuring data and application portability across different cloud platforms.

Regulation and Compliance Automation: As data privacy and compliance become increasingly important, new tools will automate these aspects within the DevOps pipeline. These tools will ensure that all data handling and processing meet the regulatory standards automatically, reducing the compliance burden on organizations.
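Continuous experimentation typically routes a small share of traffic to a candidate model while the rest stays on the current one. A minimal sketch of deterministic, hash-based assignment follows; the variant names, the 90/10 split, and the experiment label are hypothetical. Hashing the user ID together with the experiment name keeps each user on the same variant across requests without storing any assignment state.

```python
import hashlib


def assign_variant(user_id, experiment,
                   variants=("control", "candidate"), weights=(0.9, 0.1)):
    """Deterministically map a user to a model variant for one experiment."""
    if len(variants) != len(weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("variants and weights must align and weights must sum to 1")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Map the hash to one of 10,000 evenly spaced buckets in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") % 10_000 / 10_000
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if bucket < cumulative:
            return variant
    return variants[-1]  # guard against floating-point rounding at the top end
```

Because assignment depends only on its inputs, every service replica computes the same answer, and changing the experiment name reshuffles users independently for the next test.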

The future of DevOps in data science is not just about speeding up the process but making it smarter, more proactive, and more aligned with business needs. With AI and new tools, DevOps is set to become an even more integral part of how data science drives business innovation and value.

Conclusion

The integration of DevOps practices into data science workflows is transforming the landscape of business analytics and decision-making. By fostering collaboration, enhancing efficiency, and promoting a culture of continuous improvement, DevOps is proving to be indispensable in helping organizations leverage their data more effectively and responsively.

Recapping the importance, here are the key benefits of integrating DevOps into data science:

Enhanced Speed and Agility: DevOps accelerates the lifecycle of data science projects from development to deployment, enabling organizations to respond more quickly to market changes and internal demands. Continuous integration and deployment ensure that data science models are iteratively improved and promptly delivered.

Improved Collaboration: Bridging the gap between data scientists, developers, and IT operations, DevOps encourages a more collaborative and transparent work environment. This unified approach not only streamlines workflows but also ensures that models are more aligned with business objectives and technical requirements.

Greater Scalability and Reliability: Through practices such as containerization and automation, DevOps helps manage complex data science applications at scale. This ensures that models are both scalable and reliable under varying operational demands, crucial for maintaining consistent performance.

Continuous Monitoring and Quality Improvement: Continuous monitoring within a DevOps framework allows teams to track the performance of data science applications in real-time, quickly identifying and addressing issues. This leads to higher quality, more reliable data-driven applications.

Innovation at the Forefront: With AI increasingly automating various DevOps tasks, the potential for innovation is expanding. AI-enhanced DevOps can lead to smarter, more predictive operational capabilities, pushing the boundaries of what data science teams can achieve in shorter time frames.

In conclusion, the integration of DevOps into data science is not just a trend but a fundamental shift in how data-driven organizations operate. It enhances not only the technical capabilities but also the strategic agility of organizations, helping them remain competitive and effective in using their most valuable asset: data. Looking ahead, the role of DevOps in data science will only grow, becoming more intertwined with advancements in AI and cloud technologies and driving the next wave of business innovation.

Ready to transform your data science operations?

At Datahub Analytics, we specialize in integrating DevOps into your workflows, ensuring you can leverage the full power of your data more efficiently and effectively. Whether you’re looking to enhance collaboration across teams, streamline your data processes, or scale your operations seamlessly, our expert DevOps services and solutions are designed to get you there. Don’t let your data capabilities lag behind; contact us today to discover how our tailored DevOps strategies can help accelerate your business toward new heights of innovation and success.