GenAI and RAG in the Wild: Synthetic Data Generation and Retrieval-Augmented Analytics

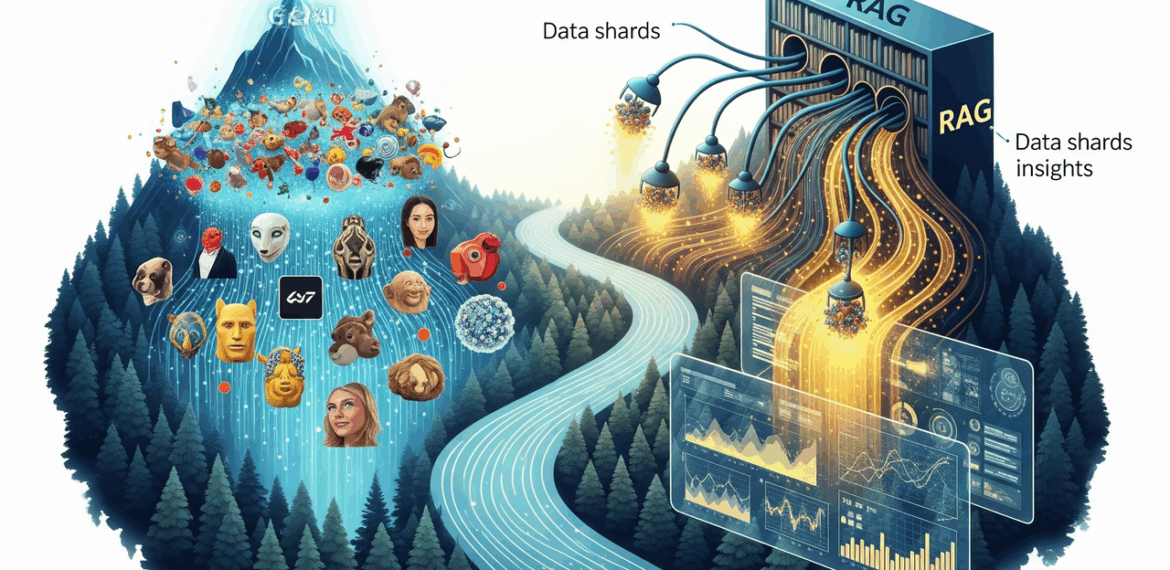

Generative AI is no longer a laboratory curiosity. In 2025 it is the engine behind chat assistants that recite corporate policy, copilots that write production SQL, fraud detectors that flag suspicious invoices, and recommendation services tuned to a single user’s last click. Two breakthroughs make this leap from prototype to production possible: the generation of richly detailed synthetic data, and the discipline known as retrieval-augmented generation (RAG), which keeps large language models grounded in fresh, verifiable facts. Together they give rise to a new practice, retrieval-augmented analytics, that promises business insight with unprecedented speed and fidelity.

Generative AI Reshapes the Analytics Stack

The modern analytics stack once flowed in a straight line: raw data entered an extract-transform-load pipeline, landed inside a warehouse, and surfaced on dashboards. GenAI bends that line into a loop. Synthetic records can now flow back upstream to enrich sparse domains, while RAG-powered models reach downstream into governed data stores to answer natural-language questions in real time.

Amazon, Google, and Microsoft already expose managed services that wrap this loop. Each pairs a foundation model, an embedding service, and a vector or document store, making it possible to stand up a question-answering prototype that cites live company data in as little as an afternoon. The momentum is unmistakable: Gartner forecasts that synthetic data will overtake real data in AI training by the decade’s end, while industry blogs declare RAG the default safety harness for any enterprise-grade LLM.

From Scarcity to Synthetic Abundance

Data scientists have long battled three constraints: privacy rules that fence off real records, skewed distributions that hide edge cases, and the sheer cost of collecting, labeling, and cleaning new corpora. Synthetic data dissolves these barriers by producing artificial records that preserve statistical signal without exposing a single customer’s identity.

- A health-tech startup can release a diabetes dataset that mirrors the prevalence of rare comorbidities without leaking protected health information.
- A bank can stress-test its anti-money-laundering model on thousands of plausible smurfing scenarios that never happened.
- A smart-city project can simulate pedestrian flows for every hour of a rainy Sunday, even if the real cameras have captured only clear Friday afternoons.

Where older techniques relied on domain-specific heuristics or GANs tuned by hand, today’s foundation models generate synthetic tables and documents from a few examples and a carefully crafted prompt. The result is a programmable data tap that opens or closes to match each experiment’s appetite.

How Modern Models Create Synthetic Records

- Prompt-to-Table Generation – A data engineer describes the schema, cardinalities, and constraints in plain English, for example: “10,000 e-commerce orders with realistic product hierarchies, purchase timestamps in the last ninety days, and a 1% fraud label.” The model returns a formatted CSV that can feed downstream pipelines once it passes validation.
- Few-Shot Expansion – Instead of starting from scratch, teams feed the model a handful of real rows. The model infers column semantics, numeric ranges, and business rules, then expands the sample into a full training set while aiming to preserve the correlations between columns.
- Diffusion for Pixel-Perfect Media – Computer-vision teams apply text-to-image diffusion models to render photo-realistic corrosion on wind-turbine blades or fog-covered nighttime road scenes, situations that field cameras rarely capture.

Amazon Bedrock’s public tutorial strings these steps into a repeatable pipeline: seed, generate, validate, iterate. Statistical checks confirm distributional similarity, privacy-scoring tools estimate re-identification risk, and domain experts spot-check edge cases before the data is promoted to production.
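
The "validate" stage of that loop can start with simple, dependency-free checks. The sketch below is an assumption about what such a gate might compute, not the tutorial's own code: a two-sample Kolmogorov-Smirnov statistic (the largest gap between the empirical CDFs of a real and a synthetic column) as a distributional-similarity check, and an exact-copy rate as a crude proxy for re-identification risk. The thresholds in `validate` and the sample rows are illustrative.

```python
import bisect


def ks_statistic(real, synthetic):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs."""
    a, b = sorted(real), sorted(synthetic)
    gap = 0.0
    for v in a + b:  # the supremum is always attained at one of the observed values
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def exact_copy_rate(real_rows, synthetic_rows):
    """Share of synthetic rows (hashable tuples) that duplicate a real row verbatim."""
    real_set = set(real_rows)
    return sum(row in real_set for row in synthetic_rows) / len(synthetic_rows)


def validate(real_col, syn_col, real_rows, syn_rows, max_ks=0.1, max_copy_rate=0.0):
    """Gate a synthetic batch; the thresholds are illustrative, not standards."""
    report = {
        "ks": ks_statistic(real_col, syn_col),
        "copy_rate": exact_copy_rate(real_rows, syn_rows),
    }
    passed = report["ks"] <= max_ks and report["copy_rate"] <= max_copy_rate
    return passed, report


passed, report = validate(
    real_col=[10, 12, 15, 18, 22, 30],
    syn_col=[11, 12, 16, 19, 21, 29],
    real_rows=[("alice", 10), ("bob", 12)],
    syn_rows=[("synth-1", 11), ("bob", 12)],
)
print(passed, report)
```

Here the batch fails both checks: one synthetic row copies a real record verbatim, and with only six values per column the KS gap is about 0.17. With samples this small, any KS threshold should be chosen with the sample size in mind.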

Practical Wins Already in Production

- Capital Markets – Quant desks feed synthetic order books into agent-based simulations to gauge the slippage cost of new execution algorithms.
- Healthcare Research – Hospitals publish open synthetic cohorts for diabetic retinopathy, enabling global model benchmarking without violating HIPAA.
- Retail Supply Chain – Forecasting teams replay pandemic-style shocks on synthetic sales histories to stress-test safety-stock rules.

Across every use case the payoff is the same: faster experimentation without governance roadblocks. Yet every synthetic generator must still answer for bias. If the prompt under-represents rural patients, so will the output. Guardrails such as automated fairness audits, human review, and iterative re-prompting remain non-negotiable.
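
An automated fairness audit can begin as a representation check: compare each group's share in the synthetic output with its share in a trusted reference population, and flag large gaps for human review or re-prompting. This is a minimal sketch; the group labels, the 5-percentage-point tolerance, and the reference counts are assumptions.

```python
from collections import Counter


def representation_gaps(reference, synthetic, tolerance=0.05):
    """Flag groups whose share in the synthetic data drifts from the reference share.

    Returns {group: (reference_share, synthetic_share)} for every group whose shares
    differ by more than `tolerance`. Both inputs must be non-empty lists of labels.
    """
    ref_counts, syn_counts = Counter(reference), Counter(synthetic)
    ref_total, syn_total = sum(ref_counts.values()), sum(syn_counts.values())
    gaps = {}
    for group in sorted(ref_counts.keys() | syn_counts.keys()):
        ref_share = ref_counts[group] / ref_total
        syn_share = syn_counts[group] / syn_total
        if abs(ref_share - syn_share) > tolerance:
            gaps[group] = (ref_share, syn_share)
    return gaps


# Hypothetical populations: the generator under-represents rural patients.
reference = ["urban"] * 70 + ["rural"] * 30
synthetic = ["urban"] * 90 + ["rural"] * 10
print(representation_gaps(reference, synthetic))  # {'rural': (0.3, 0.1), 'urban': (0.7, 0.9)}
```

An empty result means every group sits within tolerance. A non-empty one is a signal to re-prompt with explicit group quotas, not proof that the data is fair in every other respect.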

Retrieval-Augmented Generation: Grounding Large Models

Large language models dazzle with fluent prose precisely because they compress the internet into probabilistic patterns. The cost is hallucination: the model’s impulse to fill gaps with plausible fictions. RAG reins that impulse in by inserting a retrieval step between the user question and the model answer.

- The question is embedded into a vector and compared against a knowledge store of company policies, product specs, or database rows.
- The most relevant passages are appended to the prompt as authoritative context.
- The model now answers with those passages in view, producing grounded text and even inline citations.
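
The three steps above can be sketched end to end without any external service. To keep it self-contained, the sketch assumes a toy bag-of-words "embedding" and cosine similarity in place of a learned embedding model and vector database, and it stops at the grounded prompt rather than calling a model. The document IDs and policy texts are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a term-count vector. Production systems call a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(u[t] * v[t] for t in u.keys() & v.keys())
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def retrieve(question, store, k=2):
    """Step 1: embed the question and return the k most similar (doc_id, text) passages."""
    q = embed(question)
    ranked = sorted(store.items(), key=lambda item: cosine(q, embed(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(question, passages):
    """Step 2: append passages as tagged context. Step 3 (not shown) sends this to the model."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return ("Answer using only the context below and cite passage IDs in brackets.\n"
            f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")


# Hypothetical knowledge store.
store = {
    "hr-leave-2025": "Parental leave is 16 weeks of paid leave for all employees.",
    "it-vpn": "Connect to the VPN before accessing internal dashboards.",
    "fin-expense": "Expense reports must be filed within 30 days.",
}
question = "How many weeks of parental leave do employees get?"
print(build_prompt(question, retrieve(question, store, k=1)))
```

Tagging each passage with its ID is what makes inline citations possible: the model is asked to echo the bracketed IDs, and those can be checked against the passages that were actually retrieved.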

This architecture shines whenever factual accuracy, timeliness, or regulatory auditability is mandatory. An HR chatbot can quote the latest parental-leave clause; a support assistant can surface the errata page of last week’s patch notes; a public-sector portal can explain zoning rules verbatim from municipal code.

Strengths and Remaining Friction

RAG delivers three immediate dividends. First, it removes the need to retrain a foundation model every time a policy document changes; re-indexing the changed document is enough. Second, it surfaces traceable evidence, turning the opaque LLM into a glass-box advisor. Third, it can respect existing access controls, because retrieval runs inside the enterprise perimeter and can filter documents by the requesting user’s permissions.

Challenges persist. Retrieval quality sets a hard ceiling on answer quality; poor chunking or stale embeddings still let hallucinations through. Token limits constrain the volume of context that can accompany a question, forcing smart summarization or hierarchical lookup. And deep relational reasoning, such as multi-table joins or what-if pivots, still benefits from explicit analytic logic rather than free-form text prediction.
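
Chunking and token limits interact: overlapping chunks reduce the chance that an answer is split across a boundary, while a token budget caps how many retrieved chunks fit beside the question. The sketch below assumes whitespace-separated words as a stand-in for the model's real tokenizer and a greedy packer that keeps chunks in rank order; both are simplifications.

```python
def chunk(text, size=50, overlap=10):
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # The last start position is chosen so the final window still reaches the end of the text.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def pack_context(ranked_chunks, budget_tokens):
    """Greedily keep the highest-ranked chunks whose combined word count fits the budget."""
    packed, used = [], 0
    for text in ranked_chunks:
        cost = len(text.split())  # crude proxy for the tokenizer's count
        if used + cost <= budget_tokens:
            packed.append(text)
            used += cost
    return packed


document = " ".join(f"w{i}" for i in range(120))
chunks = chunk(document)
print([len(c.split()) for c in chunks])  # [50, 50, 40]
print(len(pack_context(chunks, budget_tokens=100)))  # 2
```

Greedy packing is the simplest policy; summarizing low-ranked chunks or retrieving hierarchically, as the paragraph above suggests, trades extra calls for more coverage within the same budget.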

Synthetic Data Meets RAG: A Virtuous Cycle

Synthetic generation does not merely complement RAG; it supercharges it in three ways.

Test Set Creation – Teams need gold-standard question-answer pairs to measure accuracy before launch. LLMs can write their own exams by drafting diverse questions whose answers are anchored in the very documents the chatbot will retrieve.

Knowledge-Base Seeding – A fintech sandbox can populate its vector store with anonymized but realistic account statements, letting sales engineers demo capabilities without touching production data.

Bias Mitigation – If existing documents tilt toward one demographic or product line, synthetic counterfactuals can balance the corpus, ensuring the retrieval layer offers more representative context.
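
For test set creation, each synthetic question is tied to the document it was drafted from, so the retriever can be scored before any answer is generated. A minimal sketch, assuming hit rate at k (did the source document appear among the top k results?) as the metric and a simple word-overlap retriever. The documents and question-answer pairs are hypothetical and hand-written here, where an LLM would normally draft them.

```python
import re


def tokens(text):
    """Lower-cased word set used by the toy retriever."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


# Hypothetical corpus the chatbot will retrieve from.
DOCS = {
    "leave-policy": "Employees receive 16 weeks of paid parental leave.",
    "expense-policy": "Expense reports must be filed within 30 days.",
    "vpn-guide": "Connect to the VPN before opening internal dashboards.",
}

# Synthetic (question, source_doc_id) pairs; in practice an LLM drafts these from each document.
QA_PAIRS = [
    ("How many weeks of parental leave do employees receive?", "leave-policy"),
    ("When must expense reports be filed?", "expense-policy"),
    ("What should I do before opening internal dashboards?", "vpn-guide"),
]


def overlap_retriever(question, k):
    """Rank documents by the number of words they share with the question."""
    q = tokens(question)
    return sorted(DOCS, key=lambda d: len(q & tokens(DOCS[d])), reverse=True)[:k]


def hit_rate_at_k(qa_pairs, retrieve_ids, k=3):
    """Fraction of questions whose source document appears in the retriever's top-k IDs."""
    hits = sum(source in retrieve_ids(question, k) for question, source in qa_pairs)
    return hits / len(qa_pairs)


print(hit_rate_at_k(QA_PAIRS, overlap_retriever, k=1))  # 1.0
```

Because `retrieve_ids` is just a callable, the same harness can score a production retriever, and rerunning it after every re-chunking or embedding change turns retrieval quality into a regression metric.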

Retrieval-Augmented Analytics: Beyond Dashboards

Traditional business intelligence starts with a predefined metric model and ends with a static chart. Retrieval-augmented analytics turns that flow inside out. Business users pose open-ended questions, such as “Why did enterprise churn jump last quarter?”, and receive an LLM-authored narrative that references fresh warehouse data, links to root-cause documents, and includes the SQL used to answer the query.

A typical interaction unfolds as follows:

- The user types a question in plain language.
- The retriever looks up metric definitions, governance rules, and recent incident post-mortems.
- The generator writes a SQL query, executes it against the warehouse, analyzes the result set, and composes a paragraph plus visualization spec.
- The answer arrives with citations to both the metrics catalog and the underlying SQL, giving analysts a single-click path to audit or extend the logic.
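
The interaction above can be sketched with Python's built-in `sqlite3` module standing in for the warehouse. Several pieces are assumptions for illustration: the metrics catalog, its document ID, and the `accounts` table are hypothetical; a SQL template stands in for the LLM-written query; the quarter is passed in rather than parsed from "last quarter"; and the narrative an LLM would write is reduced to one sentence. Restricting the query to the caller's regions through bound parameters hints at how a governed path constrains what each user sees.

```python
import sqlite3

# Hypothetical metrics catalog that the retriever consults for governed definitions.
METRICS = {
    "churn": {
        "doc_id": "metrics-catalog/churn",
        "definition": "share of accounts active at quarter start that cancelled during the quarter",
        "sql": "SELECT AVG(cancelled) FROM accounts WHERE quarter = ? AND region IN ({regions})",
    },
}


def answer(question, quarter, allowed_regions, conn):
    # 1. Retrieve: match the question to a governed metric definition.
    name = next((m for m in METRICS if m in question.lower()), None)
    if name is None:
        return {"answer": "No governed metric matches this question.", "citations": [], "sql": None}
    metric = METRICS[name]
    # 2. Generate and execute SQL, restricted to the caller's regions via bound parameters.
    sql = metric["sql"].format(regions=",".join("?" * len(allowed_regions)))
    value = conn.execute(sql, [quarter, *allowed_regions]).fetchone()[0]
    if value is None:
        return {"answer": "No data is visible for this scope.", "citations": [metric["doc_id"]], "sql": sql}
    # 3. Compose the narrative with citations to the catalog entry and the executed SQL.
    return {
        "answer": f"{name.title()} in {quarter} was {value:.1%} ({metric['definition']}).",
        "citations": [metric["doc_id"]],
        "sql": sql,
    }


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (region TEXT, quarter TEXT, cancelled INTEGER)")
conn.executemany(
    "INSERT INTO accounts VALUES (?, ?, ?)",
    [("EMEA", "2025-Q1", 1), ("EMEA", "2025-Q1", 0), ("EMEA", "2025-Q1", 0),
     ("EMEA", "2025-Q1", 0), ("APAC", "2025-Q1", 1), ("APAC", "2025-Q1", 1)],
)
result = answer("What was churn last quarter?", "2025-Q1", ["EMEA"], conn)
print(result["answer"])
print(result["sql"])
```

Returning the executed SQL alongside the citation is what gives analysts the audit path described above: the same statement can be rerun or extended outside the copilot.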

Because the retrieval step passes through the existing semantic layer, the solution can inherit row-level security and role-based access control instead of reimplementing them, provided each query runs under the requesting user’s identity. Sensitive figures then stay within their authorized boundaries.

Testing with Synthetic Scenarios

Analytic copilots demand rigorous evaluation before they steer executive decision-making. Synthetic workloads make that feasible. Engineers can auto-generate thousands of business questions covering seasonality, cohort analysis, and edge-case partitions, then grade the copilot’s answers for correctness, completeness, and narrative clarity. Failures cycle back into prompt engineering or retrieval-ranking tweaks until performance stabilizes.
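
A minimal sketch of that evaluation loop, assuming question templates expanded over every segment in a small synthetic revenue table, with expected answers computed directly from the table. The table, the templates, and the deliberately flawed `flawed_copilot` stub (which divides by the wrong quarter when computing percent change) are hypothetical. Grading here checks numeric correctness only, not completeness or narrative clarity; adding more templates and parameter values is how the case count scales toward thousands.

```python
import re

# Hypothetical synthetic ground truth: revenue by (segment, quarter).
REVENUE = {("enterprise", "Q1"): 120.0, ("enterprise", "Q2"): 150.0,
           ("smb", "Q1"): 80.0, ("smb", "Q2"): 60.0}


def generate_cases(segments=("enterprise", "smb"), prev_q="Q1", cur_q="Q2"):
    """Expand question templates over segments, computing each expected answer from the table."""
    cases = []
    for seg in segments:
        prev, cur = REVENUE[(seg, prev_q)], REVENUE[(seg, cur_q)]
        cases.append((f"What was {seg} revenue in {cur_q}?", cur))
        cases.append((f"By what percent did {seg} revenue change from {prev_q} to {cur_q}?",
                      round(100 * (cur - prev) / prev, 1)))
    return cases


def flawed_copilot(question):
    """Stand-in for the copilot under test, with a planted bug in percent-change questions."""
    m = re.match(r"What was (\w+) revenue in (Q\d)\?", question)
    if m:
        return REVENUE[(m.group(1), m.group(2))]
    m = re.match(r"By what percent did (\w+) revenue change from (Q\d) to (Q\d)\?", question)
    if m:
        prev = REVENUE[(m.group(1), m.group(2))]
        cur = REVENUE[(m.group(1), m.group(3))]
        return round(100 * (cur - prev) / cur, 1)  # bug: should divide by prev
    return None


def grade(copilot, cases, tol=0.01):
    """Return (accuracy, failures); an answer passes if it is within `tol` of the expected value."""
    failures = []
    for question, expected in cases:
        got = copilot(question)
        if got is None or abs(got - expected) > tol:
            failures.append((question, expected, got))
    return 1 - len(failures) / len(cases), failures


accuracy, failures = grade(flawed_copilot, generate_cases())
print(accuracy)  # 0.5
for failure in failures:
    print(failure)
```

The failure list names the question, the expected value, and the copilot's answer, which is the material that feeds back into prompt or retrieval fixes.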

Organizational Impact

The era of retrieval-augmented analytics rearranges team boundaries.

- Data Engineers shift from ETL plumbing to curating high-value knowledge sources and embedding pipelines.
- Analytics Engineers become prompt architects who translate metric semantics into system prompts and chain-of-thought templates.
- Subject-Matter Experts review synthetic outputs and ground-truth citations, guarding against domain drift.
- Governance Leads codify retention, privacy, and audit policies that the retrieval layer must enforce.

The payoff is a shorter path from question to confidence. Instead of waiting days for a new dashboard, a manager can interrogate the warehouse conversationally, armed with transparent lineage and references.

A Maturity Roadmap for Practitioners

- Pilot – Stand up a proof-of-concept RAG chatbot that answers questions about policy or FAQ documents. Validate grounding quality with a synthetic Q&A set.
- Expand – Connect the retriever to the BI semantic layer. Begin answering numeric questions and exposing SQL lineage.
- Automate Evaluation – Generate thousands of synthetic business questions and score accuracy on every deployment.
- Scale – Onboard additional knowledge domains (incident post-mortems, JIRA tickets, CRM notes), each with its own embedding model tuned for its jargon.
- Optimize – Introduce caching, incremental embedding refresh, and cost-aware routing between local open-weight models and hosted commercial endpoints.
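
Incremental embedding refresh and cost-aware routing can both start small. In the sketch below, a SHA-256 content hash decides which documents need re-embedding, and a word-count threshold decides whether a prompt goes to a local or a hosted model. The index layout, the `embed_fn` callback, the 300-word threshold, and the "local"/"hosted" labels are hypothetical choices, not recommendations.

```python
import hashlib


def content_hash(text):
    """Stable fingerprint of a document's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def refresh_embeddings(docs, index, embed_fn):
    """Re-embed only documents whose content changed since the last run.

    docs: {doc_id: text}; index: {doc_id: (hash, vector)}, persisted between runs.
    Returns the doc_ids that were (re)embedded; deleted documents are dropped from the index.
    """
    changed = []
    for doc_id, text in docs.items():
        h = content_hash(text)
        if doc_id not in index or index[doc_id][0] != h:
            index[doc_id] = (h, embed_fn(text))
            changed.append(doc_id)
    for doc_id in set(index) - set(docs):
        del index[doc_id]
    return changed


def route(prompt, max_local_words=300):
    """Send short prompts to a local open-weight model and long ones to a hosted endpoint."""
    return "local" if len(prompt.split()) <= max_local_words else "hosted"


calls = []


def fake_embed(text):
    """Hypothetical embedding function that records how often it is called."""
    calls.append(text)
    return [float(len(text))]


index = {}
docs = {"policy-a": "Leave is 16 weeks.", "policy-b": "File expenses in 30 days."}
print(refresh_embeddings(docs, index, fake_embed))  # ['policy-a', 'policy-b']
docs["policy-b"] = "File expenses in 45 days."
print(refresh_embeddings(docs, index, fake_embed))  # ['policy-b']
print(route("What is the leave policy?"))  # local
```

Hashing content rather than comparing timestamps means a document that is re-saved without changes costs nothing to refresh; routing by prompt length is only one possible cost signal.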

Partner with Datahub Analytics – Turning Ideas into Running Systems

A retrieval-augmented analytics vision is inspiring, but visions do not migrate data, write prompts, or satisfy auditors. That is where Datahub Analytics comes in. Founded in 2016, the Amman-based firm focuses exclusively on data platforms, business intelligence, and advanced analytics for regulated industries, bringing a track record of more than 20 production deployments across government, telecom, banking, and retail.

Unlike generalist consultancies, Datahub Analytics works end to end on the specific capabilities discussed in this post:

- Synthetic-data engineering – Their specialists use foundation-model pipelines to generate statistically faithful, privacy-preserving datasets that unblock training and simulation projects. Public case notes describe how bespoke synthetic tables helped a Middle East telco triple the representation of rare churn events in its training data without touching customer PII.
- RAG solution design – Certified architects integrate vector stores, embedding services, and governance controls so that large language models can safely retrieve live enterprise knowledge. The team has delivered question-answering portals that cite policy PDFs, data-catalog entries, and even real-time warehouse snapshots, all behind existing RBAC fences.
- Analytics-copilot roll-out – Once retrieval is in place, the firm’s engineers layer on conversational interfaces that translate user questions into SQL against semantic models, stream results back through the LLM, and log every query for audit. One banking client now resolves 80% of ad-hoc data requests through chat instead of ticket queues, freeing analysts for deeper work (internal benchmark shared in a client webinar, April 2025).
- Managed evolution – The firm pairs agile sprints with formal MLOps: automated embedding refresh, synthetic-test regression suites, cost-aware model routing, and quarterly bias-risk reviews. This governance backbone keeps experiments from eroding security or compliance posture.

Looking Ahead

Synthetic data and retrieval-augmented generation are more than adjacent tactics; they are reciprocal forces. Synthetic records fuel safer, faster experimentation, while RAG grounds model outputs in the very knowledge that enterprises fight to protect. As the two mature in tandem, analytics will feel less like a sequence of extract-load-visualize steps and more like a conversation with an always-on domain expert, one who can cite chapter and verse, reproduce every calculation, and scale to every corner of the business.

The organizations that embrace this loop early will spend less time wrestling pipelines and more time asking better questions. In a market where the distance between insight and action defines competitive edge, that shift is nothing short of transformational.