Unlocking Data Potential with Azure Data Factory: A Comprehensive Guide

In today’s rapidly evolving digital landscape, data integration and transformation have become cornerstone processes for organizations aiming to harness the full potential of their data assets. As businesses generate and accumulate data at an unprecedented scale, the ability to efficiently aggregate, process, and analyze this data from diverse sources becomes critical. This is not just about keeping pace with the volume of data but also about deriving actionable insights that can drive strategic decisions and foster innovation.

Enter Azure Data Factory (ADF), Microsoft’s cloud-based data integration service. ADF empowers businesses to create data-driven workflows that orchestrate and automate data movement and data transformation across a wide range of data stores and processing services. With its visually intuitive environment, ADF simplifies the complex task of building, managing, and operating data pipelines, enabling seamless data integration and transformation at scale.

In this blog, we will delve into the expansive features and benefits that Azure Data Factory offers, providing a roadmap for businesses and IT professionals looking to leverage this powerful service. We will explore how ADF can be a game-changer in your data strategy, from simplifying ETL processes to facilitating advanced analytics and big data projects. Whether you’re new to data integration or looking to enhance your existing workflows, this blog aims to equip you with the knowledge and insights to get started with Azure Data Factory, unlocking new possibilities for your data-driven initiatives.

Understanding Azure Data Factory

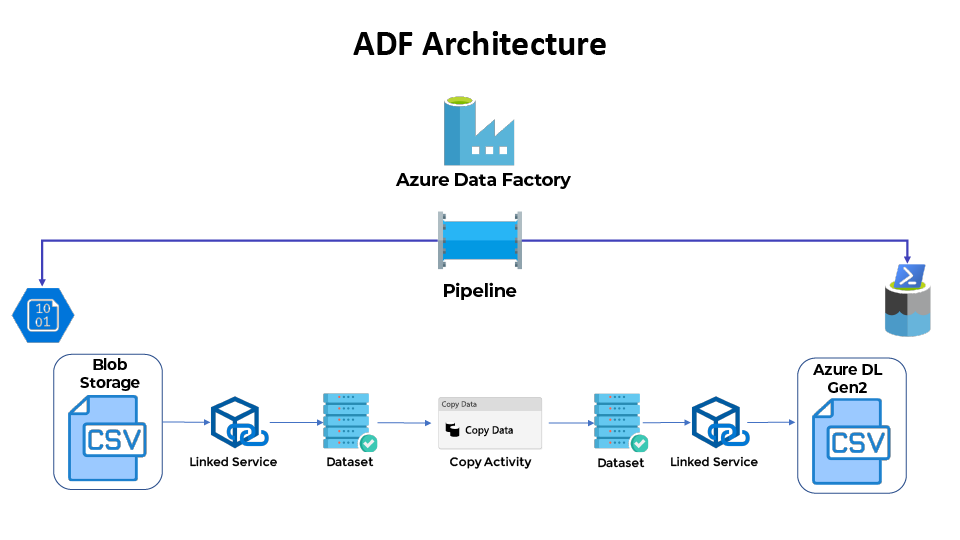

Azure Data Factory (ADF) stands at the forefront of cloud-based data integration services, offering a comprehensive solution designed to facilitate the seamless movement and transformation of data across various data stores and computing services. At its core, ADF provides a scalable, serverless data integration service that allows organizations to create, schedule, and orchestrate ETL/ELT processes with ease and precision. To understand how ADF functions and the value it brings to data integration projects, it’s essential to familiarize ourselves with its primary components and architecture.

Primary Components of Azure Data Factory

Pipelines: In ADF, pipelines are the key components that define the workflow for data movement and data processing tasks. Think of a pipeline as a logical grouping of activities that perform a unit of work. Within a pipeline, you can manage the flow of data, orchestrate multiple activities, and specify conditions for executing those activities.

Activities: Activities are the tasks performed within a pipeline. ADF supports three broad kinds of activities: data movement activities (such as the copy activity), data transformation activities (using services like Azure HDInsight for Hadoop processing, Azure Databricks, and Azure Machine Learning; Azure Data Lake Analytics was also supported but has since been retired by Microsoft), and control activities for orchestrating workflow logic.

Datasets: Datasets represent the structure of the data within the data stores, serving as the inputs and outputs of activities in a pipeline. They essentially define the schema of the data you are working with, enabling ADF to understand how to read from and write to your data sources.

Linked Services: Linked services are akin to connection strings, which define the connection information needed for ADF to access external resources like data stores or compute services. They act as bridges between your ADF environment and the data sources or processing services you are utilizing in your data integration workflows.
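
To make the relationship between these four components concrete, here is a minimal sketch in Python that builds the JSON-style definitions ADF uses for a linked service, two datasets, and a pipeline with one copy activity. All names (`BlobStorageLS`, `RawSalesCsv`, `CopySalesPipeline`, and so on) are hypothetical, the connection string is a placeholder, and `unresolved_references` is an illustrative helper of our own, not part of any ADF SDK. The exact properties you need depend on your connector, so treat this as a shape to recognize rather than a definition to deploy.

```python
import json

# Hypothetical linked service: holds connection information only.
linked_service = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<placeholder, keep real secrets in a vault>"},
    },
}


def make_dataset(name, container, file_name):
    """Build a dataset that points at a file through the linked service."""
    return {
        "name": name,
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": linked_service["name"],
                "type": "LinkedServiceReference",
            },
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "fileName": file_name,
                }
            },
        },
    }


source_ds = make_dataset("RawSalesCsv", "raw", "sales.csv")
sink_ds = make_dataset("CuratedSalesCsv", "curated", "sales.csv")

# The pipeline groups activities; the copy activity names its input and output datasets.
pipeline = {
    "name": "CopySalesPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyRawToCurated",
                "type": "Copy",
                "inputs": [{"referenceName": source_ds["name"], "type": "DatasetReference"}],
                "outputs": [{"referenceName": sink_ds["name"], "type": "DatasetReference"}],
                "typeProperties": {
                    "source": {"type": "DelimitedTextSource"},
                    "sink": {"type": "DelimitedTextSink"},
                },
            }
        ]
    },
}


def unresolved_references(pipeline, datasets, linked_services):
    """Illustrative check: list referenced names that have no definition."""
    dataset_names = {d["name"] for d in datasets}
    service_names = {s["name"] for s in linked_services}
    missing = []
    for activity in pipeline["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            if ref["referenceName"] not in dataset_names:
                missing.append(ref["referenceName"])
    for d in datasets:
        service = d["properties"]["linkedServiceName"]["referenceName"]
        if service not in service_names:
            missing.append(service)
    return missing


print(json.dumps(pipeline, indent=2))
print(unresolved_references(pipeline, [source_ds, sink_ds], [linked_service]))
```

The chain to notice is pipeline, then activity, then dataset, then linked service: each layer refers to the next by name, which is why a renamed dataset or linked service can break a pipeline that otherwise looks unchanged.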

Azure Data Factory Architecture

ADF’s architecture is designed to provide a flexible, secure, and efficient platform for data integration. It leverages Azure’s global infrastructure to ensure data can be moved and transformed securely across on-premises and cloud environments. A key aspect of ADF’s architecture is its integration runtime (IR), which provides the compute resources required to execute activities within a pipeline. ADF offers three IR types: the Azure IR, which is fully managed in Azure; the self-hosted IR, which you install on your own machines on-premises or on virtual machines in other clouds; and the Azure-SSIS IR, which runs existing SQL Server Integration Services packages. This range makes ADF adaptable to complex data integration scenarios.

Key Features of Azure Data Factory

Data Integration: ADF excels at integrating data from disparate sources, enabling organizations to create comprehensive datasets for analysis and decision-making.

ETL/ELT Capabilities: ADF supports both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes, providing flexibility in how data is processed and stored. This allows businesses to choose the approach that best fits their performance and scalability requirements.

Support for Diverse Data Sources: With ADF, you can connect to a wide array of data sources, including relational databases, NoSQL databases, file systems, and cloud services. This extensive connectivity ensures that businesses can integrate virtually any data, regardless of where it resides.

In summary, Azure Data Factory is a powerful and versatile data integration service that simplifies the creation and management of complex data pipelines. Its ability to orchestrate data movement and transformation processes, coupled with its support for a broad spectrum of data sources and computing services, makes it an essential tool for businesses looking to optimize their data integration workflows.

Why Azure Data Factory?

Azure Data Factory (ADF) stands out in the cloud-based data integration landscape for its comprehensive capabilities, designed to meet the demands of modern data-driven environments. Its distinct advantages position ADF as a compelling choice for organizations looking to optimize their data integration and transformation strategies. Here, we explore the benefits of using ADF, how it compares with other data integration tools, and real-world use cases that illustrate its impact.

Benefits of Using ADF

Scalability: ADF is built to handle data integration at scale, accommodating the needs of businesses as they grow. Whether dealing with large volumes of data or complex processing requirements, ADF can scale resources automatically, ensuring that your data workflows remain efficient and responsive to demand.

Flexibility: With support for a wide range of data sources and targets, ADF offers unparalleled flexibility in data integration scenarios. It allows organizations to integrate and transform data across various cloud-based and on-premises storage systems and services, ensuring seamless data flow across the enterprise ecosystem.

Cost-Effectiveness: ADF’s pay-as-you-go pricing model ensures that organizations only pay for the resources they use, without the need for significant upfront investments. This makes it an economically viable option for businesses of all sizes, from startups to large enterprises, enabling them to leverage powerful data integration capabilities while managing costs.

Comparison with Other Data Integration Tools

While there are numerous data integration tools available on the market, ADF distinguishes itself through its deep integration with other Azure services, such as Azure Data Lake Storage, Azure Synapse Analytics (formerly Azure SQL Data Warehouse), and Azure Databricks. This integration simplifies the development and management of data pipelines within the Azure ecosystem, providing a cohesive and efficient environment for data processing and analytics.

Moreover, ADF’s visual interface and low-code/no-code capabilities allow users to create and manage data pipelines with minimal coding, making it accessible to data professionals with varying levels of technical expertise. This contrasts with some other tools that may require more extensive programming knowledge, thus broadening the accessibility of data integration solutions.

Real-World Use Cases

Streamlining ETL Processes: Many organizations have leveraged ADF to streamline their ETL (Extract, Transform, Load) processes, enabling them to efficiently move data from various sources to a centralized data store for further analysis and reporting. This has proven particularly beneficial in scenarios involving complex data transformations and the need for frequently refreshed, near-real-time data.

Data Migration to the Cloud: ADF has facilitated seamless data migration projects for businesses transitioning from on-premises data storage solutions to cloud-based platforms. By automating the migration process, organizations can minimize downtime and ensure data integrity during the transition.

Enabling Advanced Analytics: By integrating disparate data sources and preparing data for analytics, ADF has empowered organizations to unlock advanced analytics and machine learning capabilities. This has enabled businesses to gain deeper insights into their operations, optimize processes, and drive strategic decision-making based on data-driven evidence.

Through its scalability, flexibility, and cost-effectiveness, Azure Data Factory has demonstrated its value across a variety of use cases, proving to be an indispensable tool for organizations seeking to harness the power of their data.

Getting Started with Azure Data Factory

Embarking on your journey with Azure Data Factory (ADF) is a straightforward process that unlocks a world of possibilities for your data integration needs. Here’s a simplified guide to get you up and running:

Setting Up Azure Data Factory:

- Start by navigating to the Azure Portal.

- Create a new Data Factory instance by searching for “Data Factory” and following the prompts. This step is about naming your factory, choosing a region, and setting basic configurations.

Understanding the Basics:

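Once your ADF instance is ready, the real fun begins. Azure Data Factory is built around a handful of core concepts. Start with these three: Pipelines, Activities, and Datasets. The fourth, Linked Services, comes into play when you connect to your data sources.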

Pipelines: Consider these as the blueprints for your data integration workflows. They define what tasks are performed and in what order.

Activities: These are the tasks themselves, ranging from data copy operations to running data transformation processes.

Datasets: Representations of your data sources and destinations. They tell ADF where to get data from and where to load it.

Connecting to Data Sources:

ADF integrates with a multitude of data sources, thanks to Linked Services. These are essentially connection strings that point to your data, whether it’s in Azure, other cloud providers, or on-premises. Creating a Linked Service is as easy as selecting your data source and providing the necessary credentials and connection details.

Tips for Success:

- Start Small: Don’t try to boil the ocean on your first go. Begin with simple pipelines and gradually introduce complexity.

- Explore Templates: Azure Data Factory offers templates for common data integration patterns. These can be a great starting point and learning aid.

- Leverage Integration Runtimes: For data sources outside Azure, consider using Integration Runtimes to securely access and process your data.

By following these steps, you’ll set a strong foundation for your data integration projects with Azure Data Factory. Remember, the key is to experiment, learn, and iterate.

Advanced Features and Best Practices in Azure Data Factory

Azure Data Factory (ADF) not only simplifies the process of data integration and transformation but also comes equipped with advanced features designed to cater to complex data processing needs. Here’s a look at some of these advanced capabilities and best practices for leveraging ADF effectively.

Advanced Features:

Data Flows: A powerful feature in ADF, data flows allow for visually designing data transformations without writing code. There are two types:

Mapping Data Flows: Ideal for complex ETL processes, mapping data flows enable you to design data transformations visually. They support a wide range of transformations such as joining, aggregating, and sorting data.

Wrangling Data Flows: Based on Power Query, and now surfaced in ADF as the Power Query activity, wrangling data flows are designed for data preparation tasks. They are particularly useful for data engineers and analysts looking to clean and model data interactively before it’s loaded into a data warehouse or used for analytics.

Monitoring and Managing Pipelines:

Performance Monitoring: Azure Data Factory provides built-in monitoring tools and dashboards to track the performance of your pipelines. It’s crucial to regularly review these metrics to ensure your data processes are running smoothly and efficiently.

Alerts and Notifications: Setting up alerts for critical events or failures in your pipelines can help you respond quickly to issues. ADF lets you define alert rules on pipeline and activity metrics and integrates with Azure Monitor, which can deliver email and other notifications as part of a comprehensive monitoring setup.

Best Practices:

Incremental Loads: For large datasets, consider implementing incremental data loads rather than full refreshes. This approach minimizes data movement and processing time, making your pipelines more efficient.
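
One common way to implement incremental loads is the high-watermark pattern: remember the latest modification timestamp you have already loaded, and on each run copy only the rows changed after it. The sketch below simulates that logic in plain Python on in-memory rows. The `modified_at` column and the `incremental_load` function are assumptions made for illustration; in ADF itself this pattern is typically assembled from lookup and copy activities plus a step that stores the new watermark.

```python
from datetime import datetime


def incremental_load(source_rows, last_watermark):
    """Return the rows changed after last_watermark and the new watermark."""
    changed = [row for row in source_rows if row["modified_at"] > last_watermark]
    # If nothing changed, keep the old watermark so the next run starts from the same point.
    new_watermark = max((row["modified_at"] for row in changed), default=last_watermark)
    return changed, new_watermark


# Hypothetical source table with a last-modified column.
rows = [
    {"id": 1, "modified_at": datetime(2024, 1, 1)},
    {"id": 2, "modified_at": datetime(2024, 1, 5)},
    {"id": 3, "modified_at": datetime(2024, 1, 9)},
]

watermark = datetime(2024, 1, 3)

# First run: only rows modified after 3 January are picked up.
changed, watermark = incremental_load(rows, watermark)
print([row["id"] for row in changed])  # [2, 3]
print(watermark)                       # 2024-01-09 00:00:00

# Second run with no new changes: nothing is copied and the watermark is unchanged.
changed_again, watermark = incremental_load(rows, watermark)
print(len(changed_again))              # 0
```

The important design choice is that the watermark only advances after a successful load, so a failed run can simply be repeated without skipping data.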

Parameterization: Make your pipelines reusable and flexible by parameterizing datasets, linked services, and activities. This practice allows for easier adjustments and scaling of your data processes.
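
As a rough illustration of parameterization, the sketch below shows a pipeline definition that declares two parameters and refers to them with ADF’s `pipeline().parameters` expression syntax, so one pipeline can serve many tables. The names (`CopyTablePipeline`, `tableName`, `targetFolder`, `StagingFolder`) are hypothetical, and `resolve_parameters` and `preview` are small helpers written for this post to preview the substituted values locally. They are not ADF’s expression engine and handle only this one expression form.

```python
import re

# Hypothetical pipeline that copies whichever table it is told to copy.
pipeline = {
    "name": "CopyTablePipeline",
    "properties": {
        "parameters": {
            "tableName": {"type": "String"},
            "targetFolder": {"type": "String", "defaultValue": "staging"},
        },
        "activities": [
            {
                "name": "CopyTable",
                "type": "Copy",
                "outputs": [
                    {
                        "referenceName": "StagingFolder",
                        "type": "DatasetReference",
                        "parameters": {"folderPath": "@pipeline().parameters.targetFolder"},
                    }
                ],
                "typeProperties": {
                    "source": {
                        "type": "AzureSqlSource",
                        "sqlReaderQuery": "SELECT * FROM @{pipeline().parameters.tableName}",
                    }
                },
            }
        ],
    },
}

# Matches both "@pipeline().parameters.x" and the interpolated "@{pipeline().parameters.x}".
PARAM_REF = re.compile(r"@\{?pipeline\(\)\.parameters\.(\w+)\}?")


def resolve_parameters(pipeline, supplied):
    """Merge supplied values with declared defaults; fail on missing required values."""
    values = {}
    for name, spec in pipeline["properties"]["parameters"].items():
        if name in supplied:
            values[name] = supplied[name]
        elif "defaultValue" in spec:
            values[name] = spec["defaultValue"]
        else:
            raise ValueError(f"missing required parameter: {name}")
    return values


def preview(text, values):
    """Local preview only: substitute parameter references with their values."""
    return PARAM_REF.sub(lambda match: str(values[match.group(1)]), text)


activity = pipeline["properties"]["activities"][0]
for table in ["dbo.Orders", "dbo.Customers"]:
    values = resolve_parameters(pipeline, {"tableName": table})
    print(preview(activity["typeProperties"]["source"]["sqlReaderQuery"], values))
    print(preview(activity["outputs"][0]["parameters"]["folderPath"], values))
```

Running the same definition for two tables is the point: the pipeline stays fixed while the run-time values change, which keeps the number of near-duplicate pipelines down.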

Error Handling: Design your pipelines with robust error handling to manage failures gracefully. Use retry policies, logging, and conditional activities to handle exceptions and ensure data integrity.
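
To show what a retry policy and a conditional failure path can look like, here is a small sketch. The two dictionaries follow the JSON shape ADF uses for an activity `policy` and for a `dependsOn` condition; the activity names and the webhook URL are placeholders. The `run_with_retry` function is our own simulation of the retry behavior, written under the assumption that `retry` counts additional attempts after the first one.

```python
import time

# Copy activity with a retry policy (hypothetical names and values).
copy_activity = {
    "name": "CopyOrders",
    "type": "Copy",
    "policy": {"timeout": "0.01:00:00", "retry": 2, "retryIntervalInSeconds": 30},
}

# Follow-up activity that runs only if the copy ultimately fails.
notify_on_failure = {
    "name": "NotifyOnFailure",
    "type": "WebActivity",
    "dependsOn": [{"activity": "CopyOrders", "dependencyConditions": ["Failed"]}],
    "typeProperties": {
        "url": "https://example.invalid/alert-hook",  # placeholder endpoint
        "method": "POST",
        "body": {"message": "CopyOrders failed"},
    },
}


def run_with_retry(action, retry, interval_seconds, sleep=time.sleep):
    """Simulate a retry policy: one initial attempt plus up to `retry` retries."""
    attempts = 0
    while True:
        attempts += 1
        try:
            return action(), attempts
        except Exception:
            if attempts > retry:
                raise  # retries exhausted, let the failure path take over
            sleep(interval_seconds)


def make_flaky(failures_before_success):
    """Build an action that fails a fixed number of times, then succeeds."""
    state = {"calls": 0}

    def action():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise RuntimeError("transient failure")
        return "ok"

    return action


policy = copy_activity["policy"]
# A no-op sleep keeps the demonstration instant.
result, attempts = run_with_retry(
    make_flaky(2), policy["retry"], policy["retryIntervalInSeconds"], sleep=lambda s: None
)
print(result, attempts)  # ok 3
```

Retries suit transient problems such as brief network interruptions. For failures that persist after the last retry, the `Failed` dependency gives the pipeline a defined place to log or notify instead of stopping silently.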

Security Practices: Secure your data by leveraging ADF’s integration with Microsoft Entra ID (formerly Azure Active Directory) and by encrypting data in transit and at rest. Always follow the principle of least privilege when assigning access to resources.

Continuous Integration and Delivery (CI/CD): Implement CI/CD practices for your ADF pipelines to automate the deployment process and maintain higher standards of reliability and quality control.

By diving into Azure Data Factory’s advanced features and adhering to these best practices, you can design efficient, scalable, and maintainable data integration solutions that propel your organization forward in its data journey.

Future of Azure Data Factory

As we venture further into the data-driven era, the landscape of data integration continues to evolve, bringing new challenges and opportunities. Azure Data Factory (ADF) stands at the forefront of this evolution, constantly adapting and innovating to meet the growing needs of businesses and data professionals. Let’s explore what the future might hold for ADF and the trends shaping data integration.

Future Developments and Trends:

Increased AI and Machine Learning Integration: The integration of AI and machine learning capabilities within ADF is expected to deepen, enabling more intelligent and automated data processing workflows. This could mean advanced data quality checks, predictive transformations, and automated decision-making processes within pipelines.

Enhanced Real-time Data Processing: As businesses demand faster insights, ADF is likely to enhance its real-time data processing and streaming capabilities. This would allow for more dynamic data pipelines capable of handling streaming data sources and providing real-time analytics.

Greater Emphasis on Data Governance and Compliance: With data privacy and compliance becoming increasingly important, ADF will likely introduce more robust data governance tools. These tools will help organizations manage data lineage, cataloging, and policy enforcement directly within their data integration workflows.

Expansion of Data Source and Destination Connectors: As the variety of data sources and destinations continues to expand, ADF will likely grow its library of connectors. This expansion will facilitate easier integration with new and emerging data stores, cloud services, and SaaS applications.

Potential New Features and Enhancements:

Low-code/No-code Development Environments: To make data integration more accessible, ADF might introduce more low-code/no-code options for designing and deploying data pipelines. This approach would lower the barrier to entry for business analysts and other non-technical users.

Advanced Monitoring and Analytics: Future versions of ADF could offer more sophisticated monitoring and analytics tools, providing deeper insights into pipeline performance, data flow patterns, and optimization opportunities.

Collaborative Data Integration Workflows: Recognizing the importance of collaboration in data projects, ADF may build on its existing Git integration with further features that support team-based development and collaboration on data integration tasks, such as shared workspaces.

Sustainability Considerations: With the growing focus on sustainability, ADF could incorporate features to help organizations measure and minimize the carbon footprint of their data integration activities, aligning with broader environmental goals.

As Azure Data Factory continues to evolve, it remains a critical tool in the data professional’s arsenal, adeptly navigating the shifting sands of data integration needs. The future of ADF looks promising, with ongoing innovations that will empower organizations to unlock the full potential of their data assets in more efficient, secure, and sustainable ways.

Conclusion

In this blog, we’ve taken a comprehensive look at Azure Data Factory (ADF), from its fundamental components and benefits to getting started and leveraging advanced features for efficient, scalable, and maintainable data integration solutions. We’ve also peeked into the promising future of ADF, highlighting potential enhancements that will continue to make it an indispensable tool in the evolving landscape of data integration.

Azure Data Factory stands out as a robust, flexible, and cost-effective solution for data integration needs, enabling organizations to harness the full potential of their data assets. With its continuous evolution and adaptability, ADF is well-equipped to meet the challenges of modern data integration, offering a platform that supports a wide range of data sources, complex ETL processes, and scheduled or event-triggered data processing.

As we conclude this exploration of Azure Data Factory (ADF), remember that navigating the complexities of data integration can be daunting. This is where our expertise comes into play. Datahub Analytics specializes in providing tailored ADF services, designed to streamline your data workflows and unlock actionable insights from your data assets. Leveraging our deep understanding of ADF and its capabilities, we ensure your organization achieves seamless data integration and transformation, positioning you for success in today’s data-driven landscape. Reach out to us to elevate your data strategy with Azure Data Factory.